Gaussian Markov Random Fields

Contents

1.3. Gaussian Markov Random Fields¶

A Markov random field or MRF is an undirected graphical model where the energy is given by the som of unary and multi-variable potentials \(\psi\):

where \(j\) ranges over the variable nodes \(x_j\) and \(c\) indexes over cliques in the graph of variables. We use \(X_c\) to denote all variables associated with clique \(c\). A typical case is a pairwise Gaussian MRF, where

the \(\psi_j(x_j)\) are quadratic potentials \(\|A_j x_j-b_j\|^2_{R_j}\) associated with measurements \(b_j\).

the \(\psi_c(X_c)=\psi_c(x_{j_1},x_{j_2})\) are pairwise potentials \(\|A_{j_1} x_{j_1}+A_{j_2} x_{j_2}-b_c\|^2_{Q_c}\) over pairwise cliques \(X_c=\{x_{j_1},x_{j_2}\}\), typically enforcing smoothness.

It will not surprise anyone, that Gaussian MRF’s can easily and advantageously be represented using Gaussian factor graphs. Indeed, we can rewrite the energy \(E(X)\) as

where the factors \(\phi_i(X_i)\) encompass both unary and binary factors, and of course we can add arbitrary arity factors as well.

Below we create a small “image “example, and show how we can solve it the same tools as the ones we used in the Kalman smoothing examples.

%pip -q install gtbook # also installs latest gtsam pre-release

Note: you may need to restart the kernel to use updated packages.

import numpy as np

import plotly.express as px

try:

import google.colab

except:

import plotly.io as pio

pio.renderers.default = "png"

import gtsam

from gtbook.display import show

from gtbook.gaussian import sample_bayes_net

from gtsam import noiseModel

1.3.1. A Small “Image” MRF¶

We first define the image dimensions and set up some keys referring to rows by a letter and columns by an integer:

M, N = 3, 4 # try playing with this

row_symbols = [chr(ord('a')+row) for row in range(M)]

keys = {(row, col): gtsam.symbol(row_symbols[row], col+1)

for row in range(M) for col in range(N)}

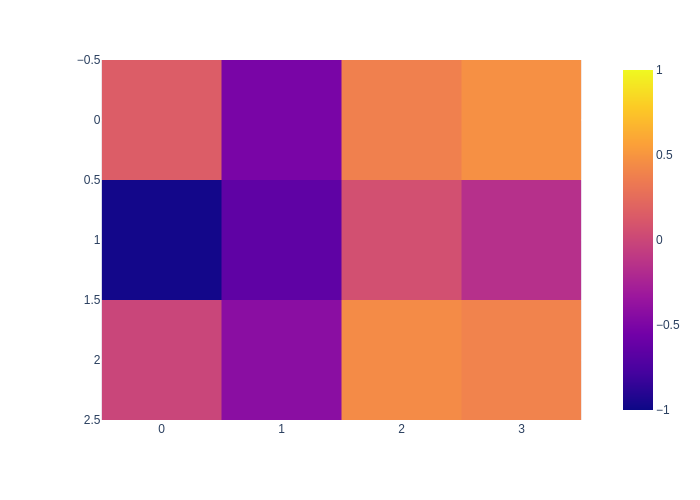

We create random data, and define a noise model for the data with the right standard deviation:

sigma = 0.5

rng = np.random.default_rng(42)

data = rng.normal(loc=0, scale=sigma, size=(M, N, 1))

data_model = noiseModel.Isotropic.Sigmas([sigma])

print(f"data_model = {data_model}")

px.imshow(data[:,:,0], zmin=-1, zmax=1)

data_model = isotropic dim=1 sigma=0.5

For smoothness, we use a different noise model:

smoothness_sigma = 0.5

smoothness_model = noiseModel.Isotropic.Sigmas([smoothness_sigma])

print(f"smoothness_model = {smoothness_model}")

smoothness_model = isotropic dim=1 sigma=0.5

Then we create the factor graph:

I = np.eye(1, 1, dtype=float)

zero = np.zeros((1,1))

graph = gtsam.GaussianFactorGraph()

for row in range(M):

for col in range(N):

# add data terms:

j = keys[(row,col)]

graph.add(j, I, np.array(data[row,col]), data_model)

# add smoothness terms:

if col>0:

j1 = keys[(row,col-1)]

graph.add(j, I, j1, -I, zero, smoothness_model)

if row>0:

j2 = keys[(row-1,col)]

graph.add(j, I, j2, -I, zero, smoothness_model)

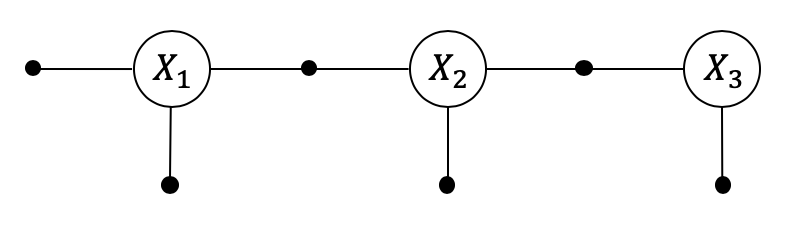

We can display the graph, although graphviz (our graph plotting engine) does not respect the “image” nature. Note that we omit the “dot” for binary factors, which both reveals the undirected MRF and is easier on the eye.

position_hints = {c:float(1-i) for i,c in enumerate(row_symbols)}

show(graph, binary_edges=True, hints=position_hints)

1.3.2. Inference using a Direct Solver¶

As in Kalman smoothing, we can use multi-frontal elimination, using a “nested dissection” ordering:

nested = gtsam.Ordering.MetisGaussianFactorGraph(graph)

nested_bayes_tree = graph.eliminateMultifrontal(nested)

show(nested_bayes_tree)

In this case, the structure of the Bayes tree reveals that (for M=3,N=4) the image is split into three regions that are separately solved.

Unfortunately, the wrapper does not support easy visualization of the “divide and conquer” approach of nested dissection, but here is an ascii image showing the first “cut”, dividing up the image into regions handled by the left, middle, and right branches, respectively:

1 2 3 4

_______

a|l l * r|

b|* * r r|

c|m m * r|

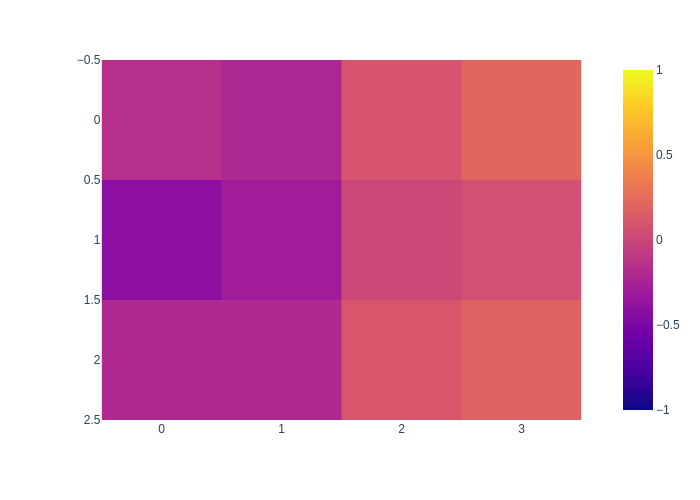

The MAP solution is obtained by back-substitution, and can be seen as a smoother version of the data:

mean = nested_bayes_tree.optimize()

px.imshow(mean.vector().reshape((M,N)), zmin=-1, zmax=1)

And of course, now ancestral sampling can also be done in parallel (here in the Bayes net):

gbn = graph.eliminateSequential(nested)

samples = sample_bayes_net(gbn, 3)

for row in range(M):

print(" ".join([str(np.round(samples[keys[(row,col)]],2)) for col in range(N)]))

[[-0.3 -0.38 -0.53]] [[ 0.23 -0.41 -0.14]] [[ 0.35 -0.04 0.72]] [[0.64 0.12 0.35]]

[[-0.22 -0.34 -0.48]] [[ 0.06 -0.42 -0.17]] [[ 0.54 -0.06 -0.05]] [[0. 0.15 0.16]]

[[ 0.61 -0.03 -0.29]] [[ 0.04 -0.25 -0.17]] [[ 0.36 -0.03 0.32]] [[0.23 0.41 0.48]]

1.3.3. Discussion¶

Direct (multi-frontal) elimination), as shown above, works like a charm in Gaussian Markov random fields with arbitrary connectivity. However, this will only scale up to large graphs when small “separators” are to be found. In particular, in image applications, the smallest separator that can be found will always be the smallest image dimension, here \(n=\min(M,N)\), and hence the root clique of the Bayes tree will have that dimension as well. When images are large, this will be prohibitively expensive, as a dense \(n \times n\) matrix will have to be factorized, and we might need to resort to different methods. Still, \(256\times256\) images for example are well within reach for exact inference.

In the next section we discuss loopy belief propagation and Gibbs sampling as alternatives.